
Demonstrations

D01: A Real Space Application by Visual Marker using Computer Displays

Yasue Kishino, Masahiko Tsukamoto (Osaka University), Yutaka Sakane (Shizuoka University), Shojiro Nishio (Osaka University)

To construct augmented reality applications with a camera, it is necessary to determine the precise location and direction of the camera, i.e., the user's view, in which virtual objects are composited. Recently, there has been extensive research based on video analysis to achieve this. In these approaches, paper-printed markers or LED markers are typically placed in the real world, and users capture images of these markers to obtain their information. However, recent trends in ubiquitous computing call for more dynamic and interactive markers. In this research, we propose a new location marking method, called VCC (Visual Computer Communication), which uses computer displays to show markers and send information.

In our method, a matrix-shaped marker blinks continuously to provide both coordinate information and attached information such as an address or a URL. We will demonstrate how a laptop PC with a CCD camera detects the VCC marker, recognizes the information, and overlays the recognized information or opens the Web page pointed to by the recognized URL. Since we assume that future environments will contain many ubiquitous displays throughout the real world, our system can be used by people walking around with camera-equipped mobile computers to obtain various information and services.
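The abstract does not specify how the blinking matrix encodes its data. As a rough illustration only, the sketch below assumes a hypothetical 4x4 marker whose four corner cells stay lit (so a camera could locate it) while the other 12 cells carry payload bits, one chunk per displayed frame, with a 16-bit length prefix. The names `GRID`, `encode`, and `decode` are hypothetical; this is not the VCC protocol itself, and it assumes every frame is captured cleanly and in order.

```python
from typing import List

GRID = 4  # hypothetical 4x4 marker
# Corner cells are always lit so a camera could locate and orient the marker.
CORNERS = {(0, 0), (0, GRID - 1), (GRID - 1, 0), (GRID - 1, GRID - 1)}
DATA_CELLS = [(r, c) for r in range(GRID) for c in range(GRID) if (r, c) not in CORNERS]

Frame = List[List[int]]


def encode(payload: bytes) -> List[Frame]:
    """Split a payload (e.g. a URL) into a sequence of blinking marker frames."""
    header = len(payload).to_bytes(2, "big")  # hypothetical 16-bit length prefix
    bits = [(byte >> (7 - i)) & 1 for byte in header + payload for i in range(8)]
    frames = []
    for start in range(0, len(bits), len(DATA_CELLS)):
        chunk = bits[start:start + len(DATA_CELLS)]
        chunk += [0] * (len(DATA_CELLS) - len(chunk))  # pad the last frame
        frame = [[0] * GRID for _ in range(GRID)]
        for r, c in CORNERS:
            frame[r][c] = 1
        for (r, c), bit in zip(DATA_CELLS, chunk):
            frame[r][c] = bit
        frames.append(frame)
    return frames


def decode(frames: List[Frame]) -> bytes:
    """Reassemble the payload from frames captured in display order."""
    bits = [frame[r][c] for frame in frames for r, c in DATA_CELLS]
    data = bytes(
        int("".join(map(str, bits[i:i + 8])), 2) for i in range(0, len(bits) - 7, 8)
    )
    length = int.from_bytes(data[:2], "big")
    return data[2:2 + length]
```

For example, `decode(encode(b"http://example.com/info"))` returns the original bytes. A real camera-based receiver would additionally need frame synchronization and error handling, which this sketch leaves out.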


D02: The Uber-Badge

Joe Paradiso (MIT Media Lab, USA)

We present the design of a new badge platform for facilitating interaction in large groups of people. We have built this device to be very flexible in order to host a wide variety of interactions in the areas where wearable and social computing converge, from game environments to meetings and conventions. This badge has both RF and IR communication, a 5x9 LED display capable of presenting graphics and scrolling text that users in the vicinity can read, an onboard microphone for 12-bit audio sampling, a 12-bit audio output, a pager motor vibrator for vibratory feedback, 3 onboard processors, capacity for up to 256 MB of flash memory, provisions for connecting LCD displays, and connectors that mate into the Responsive Environments Group's Stack Sensor platform, allowing a variety of different sensors to be integrated. We describe several applications now being developed for this badge at the MIT Media Laboratory, and touch on how it was used in a multiplayer, augmented reality urban adventure hunt game in Manhattan in the summer of 2003.


D03: ensemble: clothes, sensors and sound

Kristina Andersen, STEIM, The Netherlands

"ensemble" is a suitcase full of music-making clothes designed for children. Each piece of clothing uses sensors to modify a sound or voice: the position of a hat, the swoosh of a dress, the darkness of a lady's bag...

The project was developed as an exploration of embedded wireless sensors as tangible sonic objects made available to pre-school children. By observing how the children spontaneously explore and interpret them, we aim to capture their emerging understanding of the causalities of electronic sensing.

Seven garments are fitted with wireless sensors that control sound samples and their modifiers in real time. Each garment acts as a carrier for both the sensors and the wireless system. The sensors are separated by type and placed in the garments so that the function of each sensor is conceptually supported by the form factor of the garment: the dress holds an accelerometer, the hat tilt switches, and so on. The garments use hacked and modified game pads as wireless signal carriers.

A number of ensemble garments will be demonstrated along with two pieces of STEIM software: junXion and LiSa.
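The abstract does not describe how game-pad readings become sound control data; in the demo that mapping is handled by STEIM's software, whose configuration is not given here. Purely as an illustration, and assuming the hacked game pad reports an accelerometer as a signed 16-bit analog axis and a tilt switch as a button, the sketch below scales the axis to a 0 to 127 MIDI-style controller value and turns button changes into note on/off events. The functions `axis_to_cc` and `tilt_to_note` and their value ranges are hypothetical.

```python
from typing import Optional, Tuple


def axis_to_cc(raw: int, lo: int = -32768, hi: int = 32767) -> int:
    """Scale a signed 16-bit game-pad axis reading to a 0..127 controller value."""
    raw = max(lo, min(hi, raw))  # clamp out-of-range readings
    return round((raw - lo) * 127 / (hi - lo))


def tilt_to_note(pressed: bool, was_pressed: bool, note: int = 60) -> Optional[Tuple[str, int]]:
    """Turn a tilt switch (wired to a game-pad button) into note on/off events."""
    if pressed and not was_pressed:
        return ("note_on", note)
    if was_pressed and not pressed:
        return ("note_off", note)
    return None  # no state change, no event
```

Emitting events only on state changes keeps a held tilt (e.g. a hat worn at an angle) from retriggering the sample on every sensor poll.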

D04: Microservices: A Lightweight Web Service Infrastructure for Mobile Devices

Nicholas Nicoloudis, School of Computer Science and Software Engineering, Monash University, Australia

Our Microservices framework was developed to enable communication among various web-enabled mobile devices. It supports peer-to-peer communication based on the architecture-independent web services standard. Interoperability is essential to enable communication between mobile devices and assorted other web-enabled devices.

The framework consists of two key parts. The first is a lightweight version of the web service architecture. The second is a compact, lightweight, component-based web server capable of supporting Microservices and a range of other Internet standards. In developing the web server, we took into account that its functionality and resource requirements need not be as minimalist as those of existing embedded web servers, because mobile devices sit at the centre of the scale of hardware resource availability, between embedded systems and desktop computers.

Several limitations are imposed when implementing the web service framework for mobile devices, owing to their limited resources compared with desktop systems. However, the framework remains compatible with the original architecture, since it merely imposes certain restrictions rather than requiring a complete overhaul of the underlying architecture.
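The abstract describes a component-based web server but not its interfaces. The sketch below is a hypothetical illustration of the component idea only: a small server core that dispatches requests by path prefix to pluggable handlers, so a resource-constrained build includes just the components it needs. `ComponentServer`, `static_files`, and `echo_service` are invented names, not the Microservices API; networking is left out to keep the example self-contained, though `dispatch` could be called from a real HTTP listener such as Python's `http.server`.

```python
from typing import Callable, Dict, Tuple

# A handler takes (path, body) and returns (status, response body).
Handler = Callable[[str, bytes], Tuple[int, bytes]]


class ComponentServer:
    """Hypothetical server core: only registered components are loaded."""

    def __init__(self) -> None:
        self._components: Dict[str, Handler] = {}

    def register(self, prefix: str, handler: Handler) -> None:
        self._components[prefix] = handler

    def dispatch(self, path: str, body: bytes = b"") -> Tuple[int, bytes]:
        # Longest matching prefix wins, so "/services/echo" beats "/".
        for prefix in sorted(self._components, key=len, reverse=True):
            if path.startswith(prefix):
                return self._components[prefix](path, body)
        return 404, b"not found"


def static_files(path: str, body: bytes) -> Tuple[int, bytes]:
    # Stand-in for a static-content component.
    return 200, b"<html>index</html>"


def echo_service(path: str, body: bytes) -> Tuple[int, bytes]:
    # Stand-in for a lightweight web service operation.
    return 200, body.upper()
```

For instance, after registering `static_files` at `"/"` and `echo_service` at `"/services/echo"`, `dispatch("/services/echo", b"ping")` returns `(200, b"PING")`.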


D05: Event-Triggered SMS-Based Notification Services

Alois Ferscha (Johannes Kepler University Linz, Austria)

Short message services, initially designed for text communication between two mobile phones, are nowadays used in many other applications, including ordering services and goods, mobile payment, and news delivery. We have designed, implemented, and successfully deployed in real life an SMS-based notification system that supports push and pull services for real-time querying at sports events that use RFID for timekeeping. Participants in the sports event, e.g., runners or cyclists, are equipped with RFID chips that emit a unique ID to be captured by an RFID reader. Typically, such readers are positioned at the start and finish of the race, as well as at predefined control points for timekeeping. Most sports events offer Internet-based tools to query race results. However, spectators would also like to query and receive intermediate and final results in real time while watching the event, where Internet access typically cannot easily be provided. This was our motivation for offering mobile SMS-based push and pull services.

In the push service, users register for the race number of their choice, giving the mobile phone number to which the results should be delivered. When the participant passes a reader, the RFID signal is captured and triggers not only the timekeeping functions but also a database query for any registered delivery requests. If requests are found, the timekeeping data are converted into SMS format and delivered to the registered number(s) via an SMS gateway provided by our partner ONE. Compared to “traditional” Internet-based queries, users benefit from (nearly) worldwide coverage and from instant delivery of results, triggered by the event of interest itself. In the pull service, the user sends an SMS with the contestant's race number and immediately receives the most current results available (e.g., half-distance times, or at least the participant's name).

The Vienna City Marathon was the first sports event to offer this notification service. More than 10,000 messages were delivered via the push and pull services to destinations worldwide on the day of the race.
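The push and pull flows described above can be sketched as follows. This is a minimal in-memory illustration, not the deployed system: the `RaceNotifier` class and its method names are hypothetical, dictionaries stand in for the database, and `send_sms` is a hypothetical callback standing in for the partner's SMS gateway, whose real interface is not described.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple


class RaceNotifier:
    """Sketch of event-triggered push and on-demand pull over SMS."""

    def __init__(self, send_sms: Callable[[str, str], None], names: Dict[int, str]):
        self.send_sms = send_sms  # hypothetical gateway callback: (phone, text)
        self.names = names        # race number -> participant name
        self.subscribers: Dict[int, List[str]] = defaultdict(list)
        self.splits: Dict[int, List[Tuple[str, str]]] = defaultdict(list)

    def register(self, race_number: int, phone: str) -> None:
        """Push service: subscribe a phone number to a race number."""
        self.subscribers[race_number].append(phone)

    def on_rfid_read(self, race_number: int, checkpoint: str, elapsed: str) -> None:
        """Called when a reader captures a chip ID: keep time, then push."""
        self.splits[race_number].append((checkpoint, elapsed))
        text = f"{self.names.get(race_number, race_number)}: {checkpoint} {elapsed}"
        for phone in self.subscribers.get(race_number, []):
            self.send_sms(phone, text)

    def on_pull_request(self, phone: str, body: str) -> None:
        """Pull service: reply with the most current result for a race number."""
        race_number = int(body.strip())  # assumes the SMS body is just the number
        name = self.names.get(race_number, "unknown participant")
        if self.splits[race_number]:
            checkpoint, elapsed = self.splits[race_number][-1]
            reply = f"{name}: {checkpoint} {elapsed}"
        else:
            reply = f"{name}: no times yet"
        self.send_sms(phone, reply)
```

Because the push is issued from inside `on_rfid_read`, the timekeeping event itself triggers delivery, which mirrors the event-triggered design in the abstract.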


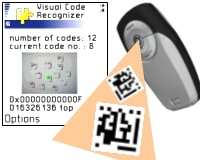

D06: Visual Code Recognition for Camera-Equipped Mobile Phones

Michael Rohs and Beat Gfeller, Institute for Pervasive Computing, Department of Computer Science, Swiss Federal Institute of Technology (ETH) Zurich, Switzerland

This demo illustrates how interaction with mobile phones can be enhanced by using two-dimensional visual codes. We present a visual code system for camera-equipped mobile phones and show a number of example applications.


Even though the computing power of current mobile phones is limited and the image quality of their cameras is comparatively poor, such devices can act as mobile sensors for two-dimensional visual codes. The codes we have developed can be displayed on electronic screens, projected with a video projector, printed on paper documents, or attached to physical objects. They act as a key to access object-related information and functionality.

The ability to detect objects in the user's vicinity offers a natural way of interaction and strengthens the role of mobile phones in a large number of application scenarios. Mobile phones are within constant reach of their users, are thus available in everyday situations, and provide continuous wireless connectivity. They are therefore well suited to act as the user's "bridge" between physical entities in the real world and associated entities in the virtual world.

The visual code system is designed for low-quality images and uses a lightweight recognition algorithm. It allows the simultaneous detection of multiple codes, introduces a position-independent code coordinate system, and provides the phone's orientation as a parameter.
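To make the idea of a code coordinate system and an orientation parameter concrete, here is a simplified sketch, not the authors' recognition algorithm. It assumes three corners of a code (its origin and the ends of its x- and y-axes) have already been located in the camera image, maps any image pixel into code coordinates with an affine transform, and reports the rotation of the code's x-axis in the image. The function names and the `size` parameter are hypothetical, and the affine model ignores perspective distortion, which would require a four-point homography.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def code_coordinates(p: Point, origin: Point, x_end: Point, y_end: Point,
                     size: float = 10.0) -> Point:
    """Map image pixel p into code coordinates (0..size along each code axis).

    origin, x_end and y_end are the image positions of three code corners,
    assumed to have been detected already.
    """
    ux, uy = x_end[0] - origin[0], x_end[1] - origin[1]  # code x-axis in the image
    vx, vy = y_end[0] - origin[0], y_end[1] - origin[1]  # code y-axis in the image
    dx, dy = p[0] - origin[0], p[1] - origin[1]
    det = ux * vy - uy * vx
    # Solve (dx, dy) = a * u + b * v for (a, b) with Cramer's rule.
    a = (dx * vy - dy * vx) / det
    b = (ux * dy - uy * dx) / det
    return a * size, b * size


def rotation_degrees(origin: Point, x_end: Point) -> float:
    """Angle of the code's x-axis, in image coordinates (y pointing down)."""
    return math.degrees(math.atan2(x_end[1] - origin[1], x_end[0] - origin[0])) % 360
```

The same physical point on the code yields the same code coordinates whether the phone is held upright or rotated by 90 degrees, which is what makes the coordinate system independent of the code's position and orientation in the image.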

D07: Improving the Reality Perception of Visually Impaired

Vlad Coroama (Institute for Pervasive Computing, ETH Zürich), Felix Röthenbacher, Christoph Plüss (ETH Zürich)

The visually impaired experience serious difficulties in leading an independent life. Particularly in unknown environments (foreign cities, large airport terminals), they rely on external assistance. But even ordinary tasks such as daily shopping in the supermarket are hard to perform independently: it is virtually impossible to distinguish among thousands of supermarket products using senses other than sight. The common cause of these problems is the lack of information the visually impaired have about their immediate surroundings.


In this demo, we show a prototype of the Chatty Environment, a ubiquitous computing system designed to help the visually impaired better understand their surroundings. Tagged objects in the Chatty Environment reveal their existence to the user through an audio interface when the user comes into their vicinity. The user can then interact with these entities, obtaining more information about their attributes or even performing small actions on them.

By supporting two complementary tagging methods, the system tries to mirror the way sighted people perceive the world. Large and important objects can be detected from a distance, as they advertise themselves to the user. For small items, such as supermarket products, a different paradigm is used: the user has to explicitly pick up an object to begin interaction.
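The two interaction paradigms can be illustrated with a small decision function. This is a hypothetical sketch, not the Chatty Environment's implementation: the abstract does not name the tagging technologies, so the sketch assumes each tagged object has a known position on a shared floor plan, that large objects advertise within a fixed range, and that small items are announced only once the user reports picking one up. `TaggedObject`, `announcements`, and the 10 m default range are invented for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class TaggedObject:
    name: str
    position: Tuple[float, float]  # metres, on a shared floor plan (assumed)
    large: bool                    # large objects advertise themselves
    range_m: float = 10.0          # hypothetical advertising range


def announcements(user_pos: Tuple[float, float], objects: List[TaggedObject],
                  picked_up: Optional[str] = None) -> List[str]:
    """Return names of objects the audio interface should announce."""
    result = []
    for obj in objects:
        if obj.large:
            # Distance-based discovery for large, important objects.
            if math.dist(user_pos, obj.position) <= obj.range_m:
                result.append(obj.name)
        elif obj.name == picked_up:
            # Small items only speak up once the user explicitly picks them up.
            result.append(obj.name)
    return result
```

Keeping small items silent until they are picked up avoids flooding the audio channel with thousands of nearby products, which is the problem the second paradigm addresses.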
